In-Brief

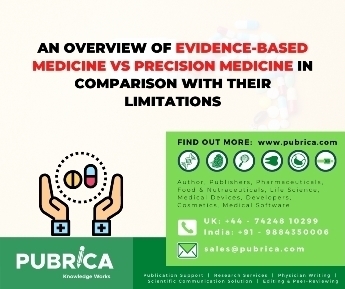

- Over the past decade, “Big data” has come to describe the rapid increase in the variety and volume of information, particularly in medical research.

- Scientists can now rapidly generate, store and analyze data that would once have taken many years to compile. “Big data” refers both to these large, expanding data volumes and to our increasing ability to analyze and interpret them.

- This Pubrica blog briefly explains how big data and precision medicine can benefit each other and improve clinical practice.

Introducing big data

Advances in digital technology have made it possible to multiplex measurements on a single sample. Hundreds, thousands or even millions of measurements may be produced concurrently, often combining technologies to give rapid measures of DNA, RNA, protein and function alongside clinical features, including measures of disease, progression and related metadata. “Big data” is best defined by its purpose. The defining characteristic of such experimental approaches is not the vast scale of measurement but the hypothesis-free approach to experimental design. In this blog, we define “Big data” experiments as hypothesis-generating rather than hypothesis-driven studies. They inevitably involve rapid measurement of many variables and are typically “bigger” than their counterparts driven by a prior hypothesis. They probe the unknown workings of complex systems: if we can measure it all and try to describe it, perhaps we can understand it all. This approach depends less on prior knowledge and has greater potential to reveal unsuspected pathways relevant to disease.
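Because a hypothesis-generating study tests many variables at once, some “hits” will appear by chance alone. One widely used safeguard, which the source does not mention and which is swapped in here purely as an illustration, is to control the false discovery rate with the Benjamini-Hochberg procedure. The sketch below assumes one p-value has already been computed per measured variable; the p-values themselves are made up. Variables that survive are candidate hypotheses for conventional follow-up, not confirmed findings.

```python
from typing import Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.05) -> list[int]:
    """Return indices of tests declared significant at false discovery rate q.

    Step-up procedure: sort the m p-values, find the largest rank k with
    p_(k) <= (k / m) * q, and reject the k smallest p-values.
    """
    m = len(p_values)
    if m == 0:
        return []
    # Indices of the tests ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank  # remember the largest rank that meets its threshold
    # Every test ranked at or below k_max is kept as a candidate hypothesis.
    return sorted(order[:k_max])


# Hypothetical p-values from screening ten measured variables against a disease label.
p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(p, q=0.05))  # [0, 1]
```

Only the first two variables clear their rank-specific thresholds here; with no correction at all, five of the ten would have passed a naive p < 0.05 cut-off.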

Some saw these new techniques as a replacement for established methods, while others argued that they were an irrelevant distraction from them. In hindsight, neither extreme was accurate. Hypothesis-generating approaches are not only synergistic with traditional methods but also dependent on them: Big data analyses are useful for asking novel questions, while conventional experimental techniques remain just as relevant for testing them.

Development of big data

The development of Big data has greatly enhanced our ability to probe which “parts” of biology may be defective. Precision medicine takes this one step further by making that information of practical value to the clinician. Precision medicine can be briefly defined as an approach that provides the right treatment to the right patient at the right time. For most clinical problems, precision strategies remain aspirational. The challenge lies in reducing biology to its parts and then determining which of those parts must be measured to choose the optimal intervention for the patients who will benefit. Still, the increasing use of hypothesis-free, Big data approaches promises to help us reach this goal.

Artificial intelligence vs big data analytics

Although the health care improvements promised by Big data techniques have mostly yet to be translated into clinical practice, the possible benefits can already be seen in clinical areas that have large, readily available and usable data sets. One such area is clinical imaging, where data are invariably digitized and stored in dedicated picture archiving and communication systems. This imaging data is also linked to clinical data in the form of image reports and the electronic health record, which carries its own extensive data. Because this data is easy to handle, it has been relatively straightforward to show, at least experimentally, that artificial intelligence, via machine learning techniques, can exploit big data to provide clinical benefit. These computing techniques are needed partly because images contain hidden information that cannot be read directly from the original datasets. This contrasts with parametric data in the clinical record, such as physiological readings (pulse rate, blood pressure) or blood test results. Digitized pathology specimen images require similar processing.
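The contrast above is between values that can be read straight from the record (a pulse rate, a blood test result) and information that must first be computed from pixels. Radiomics-style “first-order” features are one simple example of that computation; they are swapped in here as an illustration and are not a method named by the source. The function name, the bin count and the synthetic image are all assumptions, and real pipelines add intensity normalization and many more features.

```python
import numpy as np


def first_order_features(image: np.ndarray, mask: np.ndarray, bins: int = 16) -> dict[str, float]:
    """Summarize the intensities inside a region of interest (ROI).

    image: 2D or 3D array of pixel/voxel intensities.
    mask:  boolean array of the same shape marking the ROI (e.g. a lesion outline).
    """
    values = image[mask].astype(float)
    # Histogram of ROI intensities, used for a simple entropy (heterogeneity) measure.
    counts, _ = np.histogram(values, bins=bins)
    probs = counts[counts > 0] / counts.sum()
    return {
        "mean": float(values.mean()),
        "std": float(values.std()),
        "entropy_bits": float(-(probs * np.log2(probs)).sum()),
        "n_voxels": float(values.size),
    }


# Synthetic 4x4 "image" with a small bright lesion in the centre.
image = np.zeros((4, 4))
image[1:3, 1:3] = [[10, 20], [30, 40]]
roi = image > 0
print(first_order_features(image, roi))
```

A pulse rate is already a single number; a lesion only becomes comparable across patients once it has been reduced to a feature vector like this one.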

Big data can provide annotated data sets for training artificial intelligence algorithms to recognize clinically relevant conditions or features. For an algorithm to learn the relevant features, which are not pre-programmed, it needs large numbers of cases with the feature or disease under scrutiny. A separate, similarly large set of patients is then needed to test the algorithm against gold-standard annotations.

Once trained to an acceptable level, these techniques can pre-screen images and flag cases with a high likelihood of disease, so that those cases are prioritized for formal reading. Screening tests such as mammography can undergo pre-reading by artificial intelligence/machine learning to pick out the few positive studies among many normal ones, allowing rapid identification. Pre-screening of complex, high-acuity cases allows a focused approach to identifying and reviewing areas of concern. Quantification of structures within a medical image, such as tumour volume for monitoring growth or response to therapy, or cardiac ejection fraction following a heart attack to guide drug therapy for heart failure, will be incorporated into artificial intelligence algorithms. These measurements are then made automatically rather than requiring detailed manual segmentation of the structures.
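The workflow described above (test against gold-standard annotations, then use scores to prioritize reading and automate measurements) can be sketched in a few small functions. This is a minimal illustration, not any specific product's method: the `Study` record, its field names, the 0.5 threshold and all numbers are hypothetical. For the cardiac example we assume the quantity of interest is left-ventricular ejection fraction, conventionally (EDV − ESV) / EDV × 100 from end-diastolic and end-systolic volumes.

```python
from dataclasses import dataclass


@dataclass
class Study:
    study_id: str
    ai_score: float   # hypothetical model output: estimated probability of disease
    gold_label: bool  # gold-standard annotation, e.g. confirmed by expert readers


def sensitivity_specificity(studies: list[Study], threshold: float) -> tuple[float, float]:
    """Compare thresholded AI scores with gold-standard labels on a held-out test set.

    Assumes the test set contains at least one positive and one negative case.
    """
    tp = sum(s.ai_score >= threshold and s.gold_label for s in studies)
    fn = sum(s.ai_score < threshold and s.gold_label for s in studies)
    tn = sum(s.ai_score < threshold and not s.gold_label for s in studies)
    fp = sum(s.ai_score >= threshold and not s.gold_label for s in studies)
    return tp / (tp + fn), tn / (tn + fp)


def prioritized_worklist(studies: list[Study]) -> list[str]:
    """Order studies so those most likely to show disease are read first."""
    return [s.study_id for s in sorted(studies, key=lambda s: s.ai_score, reverse=True)]


def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Left-ventricular ejection fraction (%) from end-diastolic and end-systolic volumes."""
    return (edv_ml - esv_ml) / edv_ml * 100.0


def volume_from_mask(n_voxels: int, voxel_mm3: float) -> float:
    """Structure volume in mL from a segmentation voxel count (1 mL = 1000 mm^3)."""
    return n_voxels * voxel_mm3 / 1000.0


test_set = [
    Study("A", 0.92, True), Study("B", 0.15, False), Study("C", 0.71, True),
    Study("D", 0.40, False), Study("E", 0.55, False), Study("F", 0.30, True),
]
sens, spec = sensitivity_specificity(test_set, threshold=0.5)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")  # sensitivity=0.67, specificity=0.67
print(prioritized_worklist(test_set))  # ['A', 'C', 'E', 'D', 'F', 'B']
print(f"{ejection_fraction(edv_ml=120.0, esv_ml=50.0):.1f}%")  # 58.3%
print(volume_from_mask(n_voxels=2000, voxel_mm3=0.5))  # 1.0
```

Sorting by score does not remove any study from the list; it only changes the reading order, which is why pre-screening of this kind prioritizes rather than replaces formal reading.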

Artificial intelligence continues to improve: artificial and convolutional neural networks can learn to recognize image features that have not been specified in advance, and can assimilate different sets of medical data. The resulting algorithms can then be applied to similar, new clinical information to predict individual patient responses based on large prior patient cohorts. Similar techniques can also be applied to images to identify subpopulations that are otherwise very difficult to locate. Artificial intelligence may also find a role in hypothesis generation by identifying unique image features, combinations of features or previously unrecognized image patterns that relate to disease outcome. For example, a subset of patients with memory loss that may progress to dementia could have image features detectable before symptoms develop. This approach allows very large populations to be interrogated with prospective clinical follow-up, identifying the most clinically relevant image fingerprints, rather than relying on retrospective data from patients who already have the degenerative brain disease.
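The source does not name a method for finding subpopulations. Unsupervised clustering is one common choice, and plain k-means is swapped in below as a minimal sketch; it is not the convolutional-network approach the paragraph describes. It assumes each patient has already been reduced to a numeric feature vector (for example, image-derived measurements), that features are on comparable scales (k-means uses Euclidean distance, so standardize first in practice), and that the number of groups `k` is chosen and justified by the analyst.

```python
import numpy as np


def kmeans(features: np.ndarray, k: int, n_iter: int = 100, seed: int = 0) -> tuple[np.ndarray, np.ndarray]:
    """Plain k-means clustering.

    features: (n_patients, n_features) array of per-patient measurements.
    Returns (labels, centroids).
    """
    rng = np.random.default_rng(seed)
    # Start from k distinct patients chosen at random as initial centroids.
    centroids = features[rng.choice(len(features), size=k, replace=False)].astype(float)
    labels = np.zeros(len(features), dtype=int)
    for _ in range(n_iter):
        # Assign each patient to the nearest centroid (Euclidean distance).
        dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its patients; keep it if the cluster is empty.
        new_centroids = np.array([
            features[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # assignments have stabilized
        centroids = new_centroids
    return labels, centroids


patients = np.array([
    [0.0, 0.0], [0.1, 0.2], [0.2, 0.1],   # one group of (hypothetical) feature vectors
    [5.0, 5.0], [5.1, 4.9], [4.9, 5.2],   # a second, well-separated group
])
labels, centroids = kmeans(patients, k=2)
print(labels)
```

The cluster numbers themselves are arbitrary; what matters is which patients end up grouped together, and whether those groups turn out to differ in outcome on follow-up.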

Despite the vast wealth of data held in hospitals' clinical information technology systems, extracting medically useful data from the clinical domain is not a trivial task, for several diverse reasons, including the philosophy of data handling, data formats, the infrastructure for handling biological data, and the translation of new advances into the clinical domain. These problems must be addressed before these new methodologies can be applied successfully.
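The data-format problem can be made concrete with a small example. Suppose two source systems store the same blood glucose measurement under different field names and units; before any analysis, both must be mapped onto one schema. Both record layouts, the field names and the `normalize` function below are hypothetical, chosen only to illustrate the idea; the conversion uses the glucose molar mass (about 180.16 g/mol), so 1 mmol/L is about 18.016 mg/dL.

```python
from dataclasses import dataclass

# Glucose: 1 mmol/L is about 18.016 mg/dL (molar mass ~180.16 g/mol).
MG_DL_PER_MMOL_L_GLUCOSE = 18.016


@dataclass
class GlucoseReading:
    patient_id: str
    mmol_per_l: float


def normalize(record: dict) -> GlucoseReading:
    """Map records from two hypothetical source systems onto one schema.

    System A (hypothetical): {"pid": ..., "glucose_mg_dl": ...}
    System B (hypothetical): {"patient": ..., "glu": ..., "unit": "mmol/L"}
    """
    if "glucose_mg_dl" in record:
        # Convert mg/dL to mmol/L so both systems report the same unit.
        return GlucoseReading(str(record["pid"]), record["glucose_mg_dl"] / MG_DL_PER_MMOL_L_GLUCOSE)
    if record.get("unit") == "mmol/L":
        return GlucoseReading(str(record["patient"]), float(record["glu"]))
    # Refuse to guess: unknown layouts should be flagged, not silently dropped.
    raise ValueError(f"Unrecognized record format: {sorted(record)}")


a = normalize({"pid": 17, "glucose_mg_dl": 90})
b = normalize({"patient": "17", "glu": 5.0, "unit": "mmol/L"})
print(round(a.mmol_per_l, 1), b.mmol_per_l)  # 5.0 5.0
```

Raising an error on an unrecognized layout is a deliberate choice here: silently skipping records would bias any downstream analysis toward whichever system was easiest to parse.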

Conclusion

The field of biomedical research has seen an explosion in recent years in the variety of information available, collectively known as “Big data.” Big data is best considered a hypothesis-generating approach to science: not a replacement for hypothesis-driven research, but a complementary means of identifying and inferring meaning from patterns in data. An increasing range of “artificial intelligence” methods allows these patterns to be learned directly from the data itself, rather than pre-specified by researchers based on prior knowledge. Together, these advances promise significant developments in medicine.
