- Services

- Discovery & Intelligence Services

- Publication Support Services

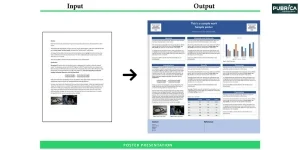

- Sample Work

Publication Support Service

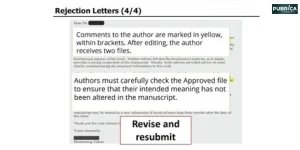

- Editing & Translation

-

Editing and Translation Services

- Sample Work

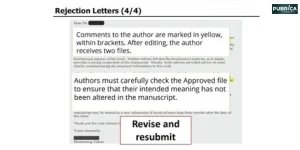

Editing and Translation Service

-

- Research Services

- Sample Work

Research Services

- Physician Writing

- Sample Work

Physician Writing Service

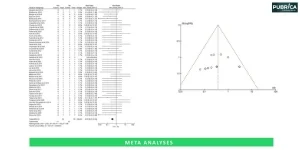

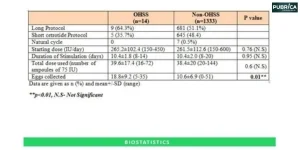

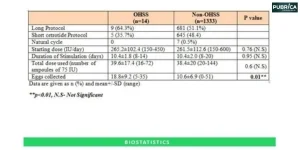

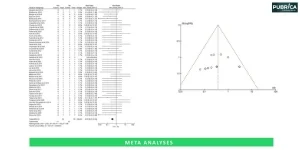

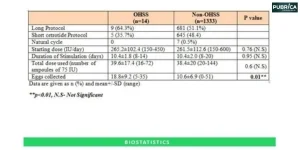

- Statistical Analyses

- Sample Work

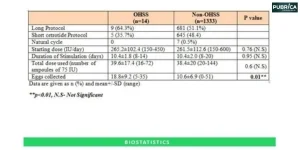

Statistical Analyses

- Data Collection

- AI and ML Services

- Medical Writing

- Sample Work

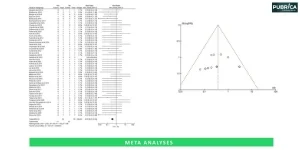

Medical Writing

- Research Impact

- Sample Work

Research Impact

- Medical & Scientific Communication

- Medico Legal Services

- Educational Content

- Industries

- Subjects

- About Us

- Academy

- Insights

- Get in Touch

- Services

- Discovery & Intelligence Services

- Publication Support Services

- Sample Work

Publication Support Service

- Editing & Translation

-

Editing and Translation Services

- Sample Work

Editing and Translation Service

-

- Research Services

- Sample Work

Research Services

- Physician Writing

- Sample Work

Physician Writing Service

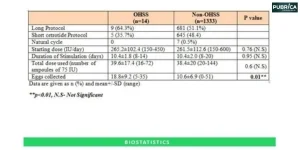

- Statistical Analyses

- Sample Work

Statistical Analyses

- Data Collection

- AI and ML Services

- Medical Writing

- Sample Work

Medical Writing

- Research Impact

- Sample Work

Research Impact

- Medical & Scientific Communication

- Medico Legal Services

- Educational Content

- Industries

- Subjects

- About Us

- Academy

- Insights

- Get in Touch

- Services

- Discovery & Intelligence Services

- Publication Support Services

- Sample Work

Publication Support Service

- Editing & Translation

-

Editing and Translation Services

- Sample Work

Editing and Translation Service

-

- Research Services

- Sample Work

Research Services

- Physician Writing

- Sample Work

Physician Writing Service

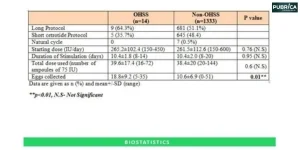

- Statistical Analyses

- Sample Work

Statistical Analyses

- Data Collection

- AI and ML Services

- Medical Writing

- Sample Work

Medical Writing

- Research Impact

- Sample Work

Research Impact

- Medical & Scientific Communication

- Medico Legal Services

- Educational Content

- Industries

- Subjects

- About Us

- Academy

- Insights

- Get in Touch

Introduction to Bayesian Statistics: Principles and Applications

An Academy Guide

Bayesian statistics provides a structured approach to statistical inference that combines prior knowledge with observed data to update beliefs about unknown parameters. Named in honour of Thomas Bayes, Bayesian methods offer an alternative to the frequentist approach, which bases inference only on the sample data.[1]

1. Bayesian Statistics

Bayesian statistics is a branch of statistics in which probabilities are interpreted as degrees of belief or certainty about events or parameters, rather than as long-run frequencies. It relies on Bayes' Theorem to update these beliefs as new evidence or data become available.[2]

Key Concepts

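- Prior Probability (Prior), $P(\theta)$: Initial belief about a parameter $\theta$ before seeing the data.

- Likelihood, $P(D \mid \theta)$: Probability of the observed data $D$ given the parameter.

- Posterior Probability (Posterior), $P(\theta \mid D)$: Updated belief about the parameter after observing the data, calculated using Bayes' Theorem:

  $$P(\theta \mid D) = \frac{P(D \mid \theta)\, P(\theta)}{P(D)}$$

  where $P(D)$, the marginal probability of the data, acts as a normalizing constant.

- Predictive Distribution: Probability of future data given current knowledge, obtained by averaging over the posterior.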
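To make the four concepts concrete, here is a minimal Python sketch that applies Bayes' Theorem numerically. It assumes a coin whose probability of heads is $\theta$, restricts $\theta$ to a hypothetical grid of 101 values, uses a Beta(2, 2) prior (consistent with the Beta(2 + 8, 2 + 2) posterior in the illustrative example below), and takes the data $D$ to be 8 heads in 10 flips. The grid approach is an illustrative approximation, not a method prescribed by this guide.

```python
from math import comb

# Hypothetical grid of candidate values for theta, the probability of heads.
grid = [i / 100 for i in range(101)]

# Prior P(theta): the Beta(2, 2) density is proportional to theta * (1 - theta);
# normalize it so the grid probabilities sum to 1.
unnorm_prior = [t * (1 - t) for t in grid]
z = sum(unnorm_prior)
prior = [p / z for p in unnorm_prior]

# Likelihood P(D | theta): binomial probability of 8 heads in 10 flips.
heads, flips = 8, 10
likelihood = [comb(flips, heads) * t**heads * (1 - t) ** (flips - heads) for t in grid]

# Evidence P(D): likelihood weighted by the prior, summed over the grid.
evidence = sum(lik * p for lik, p in zip(likelihood, prior))

# Posterior P(theta | D) = P(D | theta) * P(theta) / P(D).
posterior = [lik * p / evidence for lik, p in zip(likelihood, prior)]

# Predictive probability that the next flip is heads:
# sum over theta of P(heads | theta) * P(theta | D).
p_next_heads = sum(t * q for t, q in zip(grid, posterior))

posterior_mode = grid[posterior.index(max(posterior))]
print(f"Posterior mode: {posterior_mode:.2f}")
print(f"P(next flip is heads | D): {p_next_heads:.3f}")
```

Dividing by the evidence is what makes the posterior sum to 1. The posterior mode should land at 0.75, the mode of Beta(10, 4), and the predictive probability should be close to the posterior mean, 10/14 (about 0.714).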

2. Bayesian vs Frequentist Approaches

| Feature | Bayesian | Frequentist |
|---|---|---|
| Parameter interpretation | Random variable with a probability distribution | Fixed but unknown value |
| Prior knowledge | Used explicitly | Not used |
| Result | Posterior distribution (e.g., posterior mean and credible interval) | Point estimate and confidence interval |
| Flexibility | High (hierarchical models, complex distributions) | Often more limited in complex models |
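The following sketch contrasts the two kinds of result in the table for the same coin data (8 heads in 10 flips). The frequentist side uses a Wald 95% confidence interval, chosen here only for simplicity. The Bayesian side assumes a Beta(2, 2) prior and approximates a 95% equal-tailed credible interval from Monte Carlo draws using Python's standard `random.Random.betavariate`. The seed and the number of draws are arbitrary.

```python
import random
from math import sqrt

heads, flips = 8, 10

# Frequentist: theta is a fixed unknown value; report a point estimate and a
# 95% Wald confidence interval (a simple choice, known to be rough for small n).
mle = heads / flips
se = sqrt(mle * (1 - mle) / flips)
wald_ci = (mle - 1.96 * se, mle + 1.96 * se)

# Bayesian: theta is a random variable; with a Beta(2, 2) prior the posterior
# is Beta(2 + heads, 2 + tails) = Beta(10, 4).
a_post, b_post = 2 + heads, 2 + (flips - heads)
post_mean = a_post / (a_post + b_post)

# Approximate a 95% equal-tailed credible interval from posterior draws.
rng = random.Random(42)
draws = sorted(rng.betavariate(a_post, b_post) for _ in range(100_000))
cred_int = (draws[int(0.025 * len(draws))], draws[int(0.975 * len(draws))])

# A direct probability statement about theta, only available in the Bayesian view.
p_favours_heads = sum(d > 0.5 for d in draws) / len(draws)

print(f"Frequentist: estimate {mle:.2f}, 95% CI ({wald_ci[0]:.2f}, {wald_ci[1]:.2f})")
print(f"Bayesian: posterior mean {post_mean:.3f}, "
      f"95% credible interval ({cred_int[0]:.2f}, {cred_int[1]:.2f})")
print(f"Bayesian: P(theta > 0.5 | D) = {p_favours_heads:.3f}")
```

For these data the Wald interval's upper limit falls above 1, which is impossible for a probability and is one reason the Wald interval is a poor choice for small samples. The posterior, by contrast, stays within (0, 1) and directly answers questions such as how probable it is that the coin favours heads.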

3. Advantages of Bayesian Statistics

- Incorporates prior knowledge and expert judgment

- Provides complete probability distributions (not only point estimates)

- Can work with small sample sizes, because the prior supplements limited data

- Offers flexible modeling methods for complex data (e.g., hierarchical models)

4. Applications

- Medical research: estimating treatment effectiveness by using historical clinical studies as prior information for new trials.

- Machine learning: Bayesian inference underpins algorithms such as Naive Bayes and Bayesian neural networks.

- Economics and finance: prior economic data inform forecasts of future market trends.
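As an illustration of the Naive Bayes algorithm mentioned above, this minimal sketch classifies records from categorical features. The symptom and diagnosis training data are entirely hypothetical, and the Laplace smoothing parameter `alpha = 1` is an assumed default. Class frequencies play the role of the prior, and per-class feature frequencies play the role of the likelihood.

```python
from collections import Counter, defaultdict
from math import log

# Hypothetical toy training data: (categorical features, class label).
train = [
    ({"fever": "yes", "cough": "yes"}, "flu"),
    ({"fever": "yes", "cough": "no"}, "flu"),
    ({"fever": "yes", "cough": "yes"}, "flu"),
    ({"fever": "no", "cough": "yes"}, "cold"),
    ({"fever": "no", "cough": "no"}, "cold"),
    ({"fever": "no", "cough": "yes"}, "cold"),
]

def fit(data, alpha=1.0):
    """Count class frequencies (prior) and per-class feature-value frequencies."""
    class_counts = Counter(label for _, label in data)
    value_counts = defaultdict(Counter)  # (label, feature) -> counts of each value
    values = defaultdict(set)            # feature -> set of observed values
    for features, label in data:
        for f, v in features.items():
            value_counts[(label, f)][v] += 1
            values[f].add(v)
    return class_counts, value_counts, values, alpha

def predict(model, features):
    """Return the label with the highest log posterior score."""
    class_counts, value_counts, values, alpha = model
    total = sum(class_counts.values())
    scores = {}
    for label, n_label in class_counts.items():
        score = log(n_label / total)  # log prior P(label)
        for f, v in features.items():
            # Laplace-smoothed likelihood P(feature = v | label). The "naive"
            # assumption is that features are independent given the label.
            count = value_counts[(label, f)][v]
            score += log((count + alpha) / (n_label + alpha * len(values[f])))
        scores[label] = score
    return max(scores, key=scores.get)

model = fit(train)
print(predict(model, {"fever": "yes", "cough": "yes"}))
```

Working in log space avoids numerical underflow when many small likelihood terms are multiplied, and the smoothing keeps an unseen feature value from forcing a class probability to zero.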

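Illustrative Example: Imagine a coin with an unknown probability $\theta$ of landing heads. Prior belief: $\theta \sim \mathrm{Beta}(2, 2)$, a mild belief that the coin is roughly fair. After observing 8 heads in 10 flips (8 heads, 2 tails), the Beta prior combines with the binomial likelihood to give another Beta distribution:

$$\theta \mid D \sim \mathrm{Beta}(2 + 8,\ 2 + 2) = \mathrm{Beta}(10, 4)$$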
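The Beta(10, 4) result follows from conjugacy: with a Beta(a, b) prior and binomial data, the posterior is Beta(a + heads, b + tails). Below is a minimal sketch of that update, with the posterior mean a/(a + b) and mode (a - 1)/(a + b - 2) computed exactly. The helper name `update_beta` is hypothetical. The sketch also checks that updating one flip at a time reaches the same posterior as a single batch update.

```python
from fractions import Fraction

def update_beta(a, b, heads, tails):
    """Conjugate update: Beta(a, b) prior + binomial data -> Beta(a + heads, b + tails)."""
    return a + heads, b + tails

# Prior Beta(2, 2); data: 8 heads and 2 tails in 10 flips.
a_post, b_post = update_beta(2, 2, heads=8, tails=2)
print(f"Posterior: Beta({a_post}, {b_post})")  # Posterior: Beta(10, 4)

# Summaries of Beta(a, b), computed exactly with fractions.
post_mean = Fraction(a_post, a_post + b_post)          # a / (a + b)
post_mode = Fraction(a_post - 1, a_post + b_post - 2)  # (a - 1) / (a + b - 2), valid for a, b > 1
print(f"Posterior mean: {post_mean} ~ {float(post_mean):.3f}")  # Posterior mean: 5/7 ~ 0.714
print(f"Posterior mode: {post_mode} = {float(post_mode):.2f}")  # Posterior mode: 3/4 = 0.75

# Updating one flip at a time gives the same posterior as a single batch update,
# which is how beliefs can be revised as each new observation arrives.
flips = ["H"] * 8 + ["T"] * 2  # hypothetical order; the result does not depend on it
a, b = 2, 2
for flip in flips:
    a, b = update_beta(a, b, heads=int(flip == "H"), tails=int(flip == "T"))
assert (a, b) == (a_post, b_post)
```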

5. Common Bayesian Methods

Common computational methods in Bayesian analysis include:[3]

- Markov Chain Monte Carlo (MCMC): A family of techniques for drawing samples from complex posterior distributions.[4]

- Gibbs Sampling: An MCMC method that samples each variable in turn, conditional on the current values of the other variables.

- Variational Inference: An optimization-based method that approximates the posterior, often used for large-scale Bayesian models.
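Here is a minimal MCMC sketch: a random-walk Metropolis sampler, one of the simplest MCMC algorithms. Gibbs sampling is a different, coordinate-wise scheme and is not shown. The sampler targets the coin posterior from the illustrative example, so its output can be checked against the exact Beta(10, 4) mean. The step size, chain length, burn-in, and seed are assumed values, not tuned recommendations.

```python
import math
import random

def log_posterior(theta, heads=8, tails=2, a=2, b=2):
    """Unnormalized log posterior: Beta(a, b) prior times binomial likelihood."""
    if not 0 < theta < 1:
        return -math.inf  # zero posterior density outside (0, 1)
    return (a - 1 + heads) * math.log(theta) + (b - 1 + tails) * math.log(1 - theta)

def metropolis(n_samples, step=0.1, start=0.5, seed=0):
    """Random-walk Metropolis sampler with a symmetric Gaussian proposal."""
    rng = random.Random(seed)
    theta, current_lp = start, log_posterior(start)
    samples = []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0, step)
        proposal_lp = log_posterior(proposal)
        # Accept with probability min(1, posterior ratio). The normalizing
        # constant P(D) cancels in the ratio, so it is never needed.
        # 1.0 - rng.random() lies in (0, 1], so the log is always defined.
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            theta, current_lp = proposal, proposal_lp
        samples.append(theta)  # on rejection, the current value is repeated
    return samples

samples = metropolis(50_000)
kept = samples[5_000:]  # discard burn-in
mcmc_mean = sum(kept) / len(kept)
print(f"MCMC posterior mean: {mcmc_mean:.3f} (exact Beta(10, 4) mean: {10 / 14:.3f})")
```

Because only the ratio of posterior densities is used, the sampler never has to compute the evidence $P(D)$, which is what makes MCMC practical for posteriors that have no closed form.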

6. Limitations

- Computationally intensive methods may be needed, especially for large datasets or complex models

- Requires careful selection of priors

- Results may be sensitive to prior assumptions, particularly when data are limited
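One common way to check sensitivity to the prior is to repeat the analysis under several priors and compare the resulting posteriors. The sketch below does this for the coin example using the conjugate Beta posterior mean. The four priors are hypothetical choices, and the 100-flip case simply scales up the same 80% heads rate.

```python
# Hypothetical priors for the coin's probability of heads.
priors = {
    "uniform": (1, 1),     # Beta(1, 1): every value of theta equally plausible
    "weak": (2, 2),        # Beta(2, 2): mild preference for a fair coin
    "strong": (20, 20),    # Beta(20, 20): firm belief the coin is close to fair
    "skeptical": (2, 8),   # Beta(2, 8): expects tails to be more likely
}

def posterior_mean(a, b, heads, tails):
    """Mean of the conjugate Beta(a + heads, b + tails) posterior."""
    return (a + heads) / (a + b + heads + tails)

# Same observed heads rate (80%) with a small and a larger sample.
results = {}
for n_flips in (10, 100):
    heads = n_flips * 8 // 10
    tails = n_flips - heads
    print(f"{heads} heads, {tails} tails:")
    for name, (a, b) in priors.items():
        mean = posterior_mean(a, b, heads, tails)
        results[(name, n_flips)] = mean
        print(f"  {name:<10} prior -> posterior mean {mean:.3f}")
```

With 10 flips the posterior means range from 0.500 (skeptical prior) to 0.750 (uniform prior); with 100 flips they range only from 0.714 to 0.794, showing the prior's influence shrinking as data accumulate.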

Conclusion

Bayesian statistics provides a coherent and flexible approach to statistical inference by combining prior knowledge with observed data. Its strength lies in producing full probability distributions for unknown quantities, which support decision-making under uncertainty. With modern computational methods such as MCMC and variational inference, Bayesian approaches are now widely applicable across science, engineering, and finance.

Our Pubrica consultants are here to guide you.

References

- [1] van de Schoot, R., Kaplan, D., Denissen, J., Asendorpf, J. B., Neyer, F. J., & van Aken, M. A. G. (2014). A gentle introduction to Bayesian analysis: Applications to developmental research. Child Development, 85(3), 842–860. https://doi.org/10.1111/cdev.12169

- [2] Clyde, M., Çetinkaya-Rundel, M., Rundel, C., Banks, D., Chai, C., & Huang, L. (n.d.). Chapter 1: The basics of Bayesian statistics. GitHub.io. Retrieved October 13, 2025, from https://statswithr.github.io/book/the-basics-of-bayesian-statistics.html

- [3] Muehlemann, N., Zhou, T., Mukherjee, R., Hossain, M. I., Roychoudhury, S., & Russek-Cohen, E. (2023). A tutorial on modern Bayesian methods in clinical trials. Therapeutic Innovation & Regulatory Science, 57(3), 402–416. https://doi.org/10.1007/s43441-023-00515-3

- [4] van Ravenzwaaij, D., Cassey, P., & Brown, S. D. (2018). A simple introduction to Markov Chain Monte-Carlo sampling. Psychonomic Bulletin & Review, 25(1), 143–154. https://doi.org/10.3758/s13423-016-1015-8