Machine Learning Screening Performance and Usability in Systematic Reviews

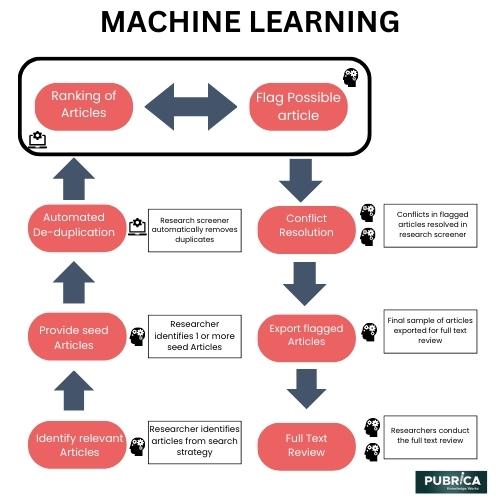

Machine learning has become an increasingly popular approach for screening studies in systematic reviews due to its potential to improve the efficiency and accuracy of the screening process. Performance and usability are important factors to consider when evaluating the effectiveness of machine learning for screening in systematic reviews.

Introduction

In terms of performance, studies have shown that machine learning algorithms can significantly reduce the time and effort required for screening. For example, one study found that using machine learning reduced the number of studies that needed to be screened by 57% while maintaining a sensitivity of 97% and a specificity of 98%. Machine learning can also help identify relevant studies that traditional screening methods might miss, improving the comprehensiveness of the systematic review.

To keep pace with the rapid publication of primary studies, faster systematic review (SR) methods are clearly needed. Title and abstract screening is a review stage that may be particularly amenable to automation or semi-automation. There is growing interest in how reviewers might use machine learning (ML) tools to speed up screening while preserving SR validity. ML algorithms can speed up screening by predicting the relevance of the remaining records after reviewers have screened a "training set." What remains unknown is how and when reviewers can rely on these predictions to semi-automate screening. Reviewers would benefit from knowing the relative reliability, usability, learnability, and costs of the available tools.

Analysis

Performance

For the analysis, the study authors exported the simulation data from Excel to SPSS Statistics. They then used data from two cross-tabulations to calculate standard performance measures for each simulation.
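The text does not list the individual measures, so the following is only a minimal sketch of how performance measures could be derived from a 2x2 cross-tabulation of tool predictions against reviewer decisions. The choice of measures (sensitivity, specificity, proportion missed, workload savings), the `CrossTab` class, the `screening_measures` function, and the example counts are all assumptions made for illustration; they are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CrossTab:
    """Hypothetical 2x2 cross-tabulation: tool prediction vs. reviewer decision."""
    tp: int  # predicted relevant, actually relevant
    fp: int  # predicted relevant, actually irrelevant
    fn: int  # predicted irrelevant, actually relevant (missed studies)
    tn: int  # predicted irrelevant, actually irrelevant


def screening_measures(ct: CrossTab) -> dict[str, float]:
    """Compute common screening measures (assumed definitions, not the study's)."""
    total = ct.tp + ct.fp + ct.fn + ct.tn
    relevant = ct.tp + ct.fn
    irrelevant = ct.fp + ct.tn
    if relevant == 0 or irrelevant == 0:
        raise ValueError("need at least one relevant and one irrelevant record")
    return {
        # Share of relevant records the tool flagged as relevant.
        "sensitivity": ct.tp / relevant,
        # Share of irrelevant records the tool flagged as irrelevant.
        "specificity": ct.tn / irrelevant,
        # Share of relevant records the tool would have missed.
        "proportion_missed": ct.fn / relevant,
        # Assumed definition: share of all records predicted irrelevant,
        # i.e. records a reviewer would not need to screen manually.
        "workload_savings": (ct.tn + ct.fn) / total,
    }


# Illustrative counts only; these are not results from any study.
example = CrossTab(tp=45, fp=150, fn=5, tn=800)
measures = screening_measures(example)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

Sensitivity and workload savings pull in opposite directions: a tool that predicts more records as irrelevant saves more screening effort but risks missing more relevant studies, which is why the measures are usually reported together.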

Strengths and limitations

This investigation evaluates the performance of, and user experiences with, several machine learning tools for screening in systematic reviews (SRs). It responds to a call from the International Collaboration for Automation of Systematic Reviews to trial and validate current technologies and to address highlighted impediments to implementation. The training sets for each tool varied for each review, although Abstrackr's predictions did not differ significantly across three trials. The authors used a training set of 200 records and a small sample of three SRs. Time savings were projected from the reduced screening burden and a typical screening rate. However, this estimate does not account for time spent troubleshooting usability difficulties, for variations in the time spent screening records as reviewers progressed through the screening task, or for differences in the time needed for rejected records compared with others.
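The projection of time savings from a reduced screening burden and a typical screening rate can be sketched as simple arithmetic. The function name `estimated_time_savings_hours` and the numbers below are hypothetical placeholders, not values reported by the study, and the calculation shares the limitation noted above: it assumes every record takes the same time to screen.

```python
def estimated_time_savings_hours(records_avoided: int, records_per_minute: float) -> float:
    """Estimate hours saved, assuming a constant screening rate (a simplification)."""
    if records_avoided < 0:
        raise ValueError("records_avoided must be non-negative")
    if records_per_minute <= 0:
        raise ValueError("records_per_minute must be positive")
    minutes_saved = records_avoided / records_per_minute
    return minutes_saved / 60.0


# Hypothetical inputs: 600 records no longer screened by hand,
# at an assumed rate of 2 records per minute.
hours = estimated_time_savings_hours(records_avoided=600, records_per_minute=2.0)
print(f"Estimated time saved: {hours:.1f} hours")
```

Because the rate is treated as fixed, an estimate of this kind ignores troubleshooting time and any speed-up or slow-down as reviewers work through the records.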

Conclusion

Abstrackr's predictions lowered the frequency of missed studies by up to 90%; however, performance varied depending on the SR. Distiller offered no benefit over screening by a single reviewer, while RobotAnalyst gave a marginal advantage. Using Abstrackr to supplement the work of a single screener may be appropriate in some circumstances, but more evaluations are required before this strategy can be recommended. The tools' usability varied widely, and further study is needed to determine how ML may be used to reduce screening workloads and to identify the kinds of screening tasks best suited to semi-automation. For example, at Pubrica, designing (or modifying) tools based on reviewers' preferences may increase usefulness and uptake (1).
